AI from an investor's perspective.

Part I: Is AI a bubble? Are AI valuations nuts?

AI is everywhere these days, and some of it is hype. Even the number of LinkedIn posts about AI is currently in bubble territory.

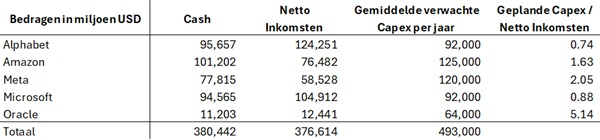

Unlike the SPAC, cannabis or meme-stock bubbles, however, the AI hype is underpinned by real investment: Meta is currently building a data center the size of Manhattan. And while there may be some circularity in the commitments between OpenAI, Nvidia and Oracle, most of the investment at the big tech players is indeed backed by real cash flows.

Only Oracle's plans raise some eyebrows: its planned investments look very high compared to its current level of revenue...

Valuations are, of course, substantial. Nvidia, for example, trades at a hefty forward price/earnings ratio of 38.4x, even though the revenue model of the major AI players remains something of an open question (more on that in a later piece this week). Still, we don't go along with the most dramatic headlines: we wouldn't call current valuations insane (yet?). At the height of the dotcom bubble in 1999, Microsoft traded at a P/E of 71x, and Cisco at as much as 171x earnings.

So there is certainly some hype around AI, but for the time being we would not call it a real bubble...

Part II: Divergence or winner takes all?

The current building frenzy and the race for ever larger data centers may give the impression that AI is becoming a "winner takes all" market. A bit like search, where Google holds a 90% market share and network effects ensure that every additional search makes the product better.

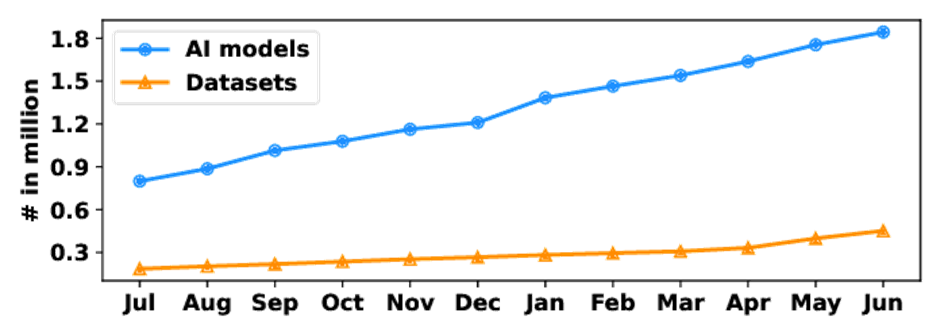

With AI, however, that is not the case for now: as DeepSeek demonstrated last year, it is possible to build a model on a relatively limited budget that is not far behind the industry leaders. Moreover, there is a strong push toward open-source models, notably from China. As a result, more than 2 million different AI models already exist.

It is still early in the AI race, and time will tell. But it is striking that, on the one hand, there is heavy investment in data centers, as you would expect in a winner-takes-all market, while on the other hand the number of models and their usage rather suggest the opposite. Moreover, first mover OpenAI is currently losing significant market share...

Beyond ChatGPT

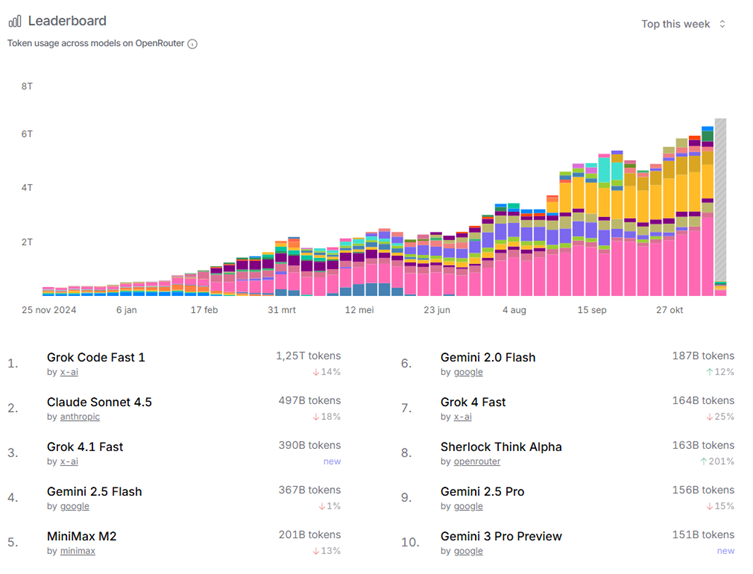

When it comes to AI, the popular press mostly talks about OpenAI. OpenAI does remain popular with mainstream consumers, but Sam Altman's company is losing considerable ground in the professional market.

As a consumer, you get a personal AI assistant in your pocket with virtually unlimited use for a monthly subscription. Professional users, by contrast, pay based on usage, at a price per 'token' (a small chunk of text) processed. Platforms like OpenRouter make it easy to switch flexibly between models, which makes them a useful proxy for which models are used the most. Below is the table of token usage across models.

Thanks to promotional offers and free versions, Elon Musk's Grok takes two of the podium spots. Behind it, Google's Gemini and Anthropic's Claude are doing particularly well. ChatGPT no longer has a single model in the top 10... Is there a winner's curse with AI models, too?
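To make the difference between a flat subscription and per-token pricing concrete, here is a minimal Python sketch. The subscription fee and the price per million tokens are hypothetical round numbers chosen for illustration, not the actual tariffs of any provider, and the function names are our own.

```python
# Hypothetical prices, for illustration only; real prices differ per model and provider.
SUBSCRIPTION_PER_MONTH = 20.0    # assumed flat consumer subscription (USD per month)
PRICE_PER_MILLION_TOKENS = 10.0  # assumed usage-based price (USD per million tokens)


def usage_cost(tokens: int, price_per_million: float = PRICE_PER_MILLION_TOKENS) -> float:
    """Cost of a number of tokens under usage-based (per-token) pricing."""
    return tokens / 1_000_000 * price_per_million


def break_even_tokens(subscription: float = SUBSCRIPTION_PER_MONTH,
                      price_per_million: float = PRICE_PER_MILLION_TOKENS) -> float:
    """Monthly token volume at which per-token pricing costs as much as the subscription."""
    return subscription / price_per_million * 1_000_000


if __name__ == "__main__":
    print(usage_cost(500_000))   # 5.0
    print(break_even_tokens())   # 2000000.0
```

With these assumed numbers, anyone consuming more than about 2 million tokens a month would pay more under usage-based pricing than under the flat subscription. Because professional users pay per token, switching to a cheaper model that is "good enough" translates directly into lower costs, which is exactly what platforms like OpenRouter make easy.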

Part III: Overcapacity

In a previous post, we discussed the huge investments in AI. In the coming years, the "big 5" tech players will spend upwards of 500 billion a year on capex to build data centers. These are amounts we can barely imagine. For reference, that is about as much as the entire European agricultural sector, or about 20x McDonald's global annual sales, if that makes it more concrete...

This raises the question of whether it will lead to massive overcapacity in the long run, especially since many 'smaller models' with more limited computing power still reach about 80% of the level of the best models, and can be 'good enough' for many tasks.

Personally, we get some "submarine cable boom" vibes from the early 2000s, when the Internet was about to take over the world and a huge overbuild of undersea Internet cables took place. In the end, most of the money invested ended up where the cables are: deep underwater. But the infrastructure built back then did allow the Internet to develop over the following decades into what it is today.

Are today's AI players the new undersea cable companies? Will the construction frenzy soon cause overcapacity and plummeting prices? In that case, investors are better off staying on the sidelines, while users stand to benefit greatly. What do you think?

Part IV: AI as stock picker

There are more than 50,000 publicly traded companies worldwide, so no analyst can get to know them all in detail. Partly for this reason, computer models have been an established part of stock selection for decades: ratios and valuation measures are calculated from the financial figures, and an initial filter is applied.

The companies that survive this screening, still a sizeable list, are flagged as "potentially interesting", after which the analyst starts doing the homework. As Warren Buffett puts it: you start with the companies beginning with the letter A, then move on to those beginning with B, and so on...
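The classic numeric screen described above can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions: the two filters (P/E and net debt/EBITDA), the thresholds and the companies with their figures are all hypothetical, whereas a real screen would use many more ratios and actual financial data.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Company:
    name: str
    price: float     # share price
    eps: float       # earnings per share
    net_debt: float  # debt minus cash
    ebitda: float    # earnings before interest, taxes, depreciation and amortization


def pe_ratio(c: Company) -> Optional[float]:
    """Price/earnings; not meaningful (None) when earnings are zero or negative."""
    return c.price / c.eps if c.eps > 0 else None


def leverage(c: Company) -> Optional[float]:
    """Net debt / EBITDA; not meaningful (None) when EBITDA is zero or negative."""
    return c.net_debt / c.ebitda if c.ebitda > 0 else None


def screen(universe: List[Company], max_pe: float = 20.0,
           max_leverage: float = 3.0) -> List[str]:
    """Return the names that pass every numeric filter ('potentially interesting')."""
    flagged = []
    for c in universe:
        pe, lev = pe_ratio(c), leverage(c)
        if pe is None or lev is None:
            continue  # ratio not meaningful: do not auto-flag, leave it to the analyst
        if pe <= max_pe and lev <= max_leverage:
            flagged.append(c.name)
    return flagged


# Hypothetical universe with made-up figures, for illustration only.
UNIVERSE = [
    Company("Alpha", price=50.0, eps=5.0, net_debt=100.0, ebitda=50.0),   # P/E 10, leverage 2
    Company("Beta", price=100.0, eps=2.0, net_debt=0.0, ebitda=80.0),     # P/E 50: too expensive
    Company("Gamma", price=20.0, eps=-1.0, net_debt=10.0, ebitda=5.0),    # loss-making
    Company("Delta", price=30.0, eps=2.0, net_debt=400.0, ebitda=100.0),  # P/E 15, leverage 4
]

if __name__ == "__main__":
    print(screen(UNIVERSE))  # ['Alpha']
```

Note what the screen cannot see: it only looks at numbers, so anything hidden in the notes or in management's outlook passes through unnoticed.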

A first drawback of this approach is that the most interesting information is often not in the numbers themselves, but rather in the notes, the outlook, management's guidance, and other information that gives the hard numbers the necessary context. Until recently, getting at it meant reading carefully through all the company documents with the necessary discipline. Moreover, the most common outcome of a stock analysis is a decision not to invest: for every company that is finally judged worth investing in, an analyst often looks at 10 where the conclusion is "njet".

Enter Large Language Models (LLMs): they are tailor-made for working through large amounts of text and summarizing it, or for spotting inconsistencies, changes from quarter to quarter, and the like. By making clever use of (well-trained) AI analysts, the filtering step can be extended to screen on text as well as numbers, so that the "potentially most interesting companies" can already be flagged at that stage.
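A minimal sketch of the text side of such a screen, assuming two hypothetical outlook paragraphs from consecutive quarters. It does not call an LLM: as a simple, rule-based stand-in, it uses Python's standard `difflib` to list the sentences that changed between quarters, and flags the company when a newly added sentence contains a phrase from a hypothetical watch-list. An LLM would do this far more flexibly (summarizing, reading between the lines, spotting inconsistencies), but the flow is the same: compare, detect changes, flag.

```python
import difflib
import re
from typing import Dict, List

# Hypothetical watch-list of cautionary phrases (illustration only).
WARNING_TERMS = ("headwinds", "below expectations", "impairment", "going concern")

# Hypothetical outlook paragraphs from two consecutive quarters.
PREVIOUS_Q = "We expect revenue growth of 10% this year. Margins should remain stable."
CURRENT_Q = ("We expect revenue growth of 5% this year. Margins should remain stable. "
             "We see headwinds in Europe.")


def sentences(text: str) -> List[str]:
    """Naive sentence split on '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s.strip()]


def guidance_changes(previous: str, current: str) -> Dict[str, List[str]]:
    """Sentences removed from and added to the outlook between two quarters."""
    removed, added = [], []
    for line in difflib.ndiff(sentences(previous), sentences(current)):
        if line.startswith("- "):
            removed.append(line[2:])
        elif line.startswith("+ "):
            added.append(line[2:])
        # lines starting with "  " are unchanged; "? " lines are diff hints
    return {"removed": removed, "added": added}


def flag(previous: str, current: str) -> bool:
    """True if any newly added sentence contains a watch-list phrase."""
    added = guidance_changes(previous, current)["added"]
    return any(term in s.lower() for s in added for term in WARNING_TERMS)


if __name__ == "__main__":
    print(guidance_changes(PREVIOUS_Q, CURRENT_Q))
    print(flag(PREVIOUS_Q, CURRENT_Q))  # True
```

Here the lowered growth guidance shows up as a changed sentence, and the new mention of "headwinds" triggers the flag; the analyst then decides whether it actually matters.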

Of course, the human analyst still has to do the homework afterwards, with the necessary knowledge and discipline. Simply asking ChatGPT for an interesting investment and blindly following that advice is obviously not a good idea. But using a well-trained AI to screen documents, so that the list of companies you dive deeper into as an analyst is as relevant and interesting as possible, helps stock analysts increase their hit rate.

Part V: How West Flanders money laid the cornerstone of the AI revolution.

A certain Ray Kurzweil predicted back in 1990 that, if computing power kept improving at the same rate, Artificial General Intelligence (AGI) would be achieved in 2029. Remarkably, in 2025 we are still on track to reach that goal. So hang on for a few more years! In that light, it is not surprising that the big tech players are pouring big budgets into AI: the holy grail is almost within reach.

For West Flanders investors with a good memory, the name Kurzweil may still ring a bell. In 1998, in the middle of the dotcom bubble, his firm, Kurzweil Applied Intelligence, was bought for USD 53 million in cash by ... Lernout & Hauspie.

When L&H went under, Kurzweil was left with a nice chunk of cash and a passion for AI avant la lettre. He spent his time wisely, co-founding the Singularity Summit in 2006 with Peter Thiel and others. It was at that Singularity Summit that Demis Hassabis, co-founder of DeepMind, met Peter Thiel, and thus secured the necessary funding for his small AI company from Peter Thiel and ... Elon Musk, among others.

DeepMind was bought by Google in 2014 for a cool 500 million USD. Although he cashed in on the deal, Elon Musk was not amused. He tried to make a counter-offer, but DeepMind rejected it. In response, he then started a new company aiming to develop AI as open-source software, called ... OpenAI. The rest is (rather tumultuous) history.

So, with the necessary nuance and some editorial license, we can (loosely) state that the West Flanders L&H investor played a part in the birth of what eventually became the current AI wave...

Authors: Jens Verbrugge, Tibo Dewispelaere, Wouter Verlinden